The Linux & Pi Experience

Intro

It took long enough, but I finally managed to set up a blog site! Now I get to yap about just about anything (probably mostly tech) and post it all online, all on a tiny site I maintain myself.

The idea of having an internet blog came about when working on a side project in Python/Flask. While still figuring out ways I could deploy it to the internet once completed, I thought to myself, “wouldn’t it be a good idea to document my development process and what I learned for others in my field to see?” And with that, we are here.

But anyways, to tie that all into the topic of Raspberry Pis… that’s the computer I used to get this repository/site up and running! And that’s coming from someone who uses Windows on a day-to-day basis. It’s hard to think of a more appropriate first topic than my process of setting up this site, and how I even started using Linux in the first place.

The first stepping stones

My usage of a Raspberry Pi, and Linux systems in general, goes back to around freshman year of college. Second-semester students in my university’s computer science department are required to take a Systems Programming class, which covers lower-level programming principles using C and the fundamentals of Unix-like operating systems. For the latter, the course covered the bash shell, git version control, and the vim and nano text editors.

What in the world is this?!

At the start of the semester, I was thoroughly used to Windows, having worked almost exclusively with that line of operating systems. I didn’t even really know what made Linux stand out so much. Why even think of switching when any task that needed a computer could be done on Windows, the most used desktop OS in the world, with a relatively user-friendly interface? Who wants to spend twenty minutes just installing Firefox and a bunch of drivers on a Linux system?

And with more specific programs like bash and vim, it felt like so much of what I was taught was stuff I’d never use. Who in the world would use a program as antique and clunky as vim when VSCode (and heck, Windows Notepad) exist? I mean, be real: typing :q! to quit and throw away your changes after making a mistake that’s too annoying to fix without a do-over? I didn’t even see the point of learning globs and wildcards; both were just confusing jargon to memorize at the time. My overall point being, why fix what’s not broken?

Now, to reiterate, just about all of this came from freshman me, who, if you haven’t figured it out yet, was at the “Mount Stupid” point of the Dunning-Kruger curve:

Source: Wikipedia

Eventually, after completing the first part of the course, we moved onto what I considered the “fun” part of the class - the programming projects. This is where I started to appreciate Linux and its associated programs much more.

First Pi projects

When I took the course, we were allowed to use a Raspberry Pi 3 Model B for the projects, but it wasn’t required because of the COVID-era supply chain issues at the time. Lucky for me, my grandfather had a bunch at his house, so I asked for one and set it up using a Linux image made specifically for the course. I also had to get hold of a Sense HAT, an add-on board with an 8x8 RGB LED matrix.

The first project we were assigned was simply to display our first and last initials in either a terminal or on the Pi’s sense hat. I had to include a header file custom-made by another professor at the school, which handled interfacing with the sense hat through C functions. Other than that, the code wasn’t all that exciting. It was just a ton of this:

```c
bm->pixel[6][0]=WHITE;
bm->pixel[5][0]=WHITE;
bm->pixel[4][0]=WHITE;
```

There were also a few clear frame buffer function calls sprayed around, and a free frame buffer call at the very end. All I understood at this point was the array assignment operations.

Using vim was still somewhat painful at this time; getting used to navigating around a file with the arrow keys was certainly a task. At one point, I think I just tried typing my code in VSCode and then echoing it into the C file on the class’s remote server. For various reasons, that didn’t work out very well, so I just had to endure it and get used to vim. Beats nano, am I right?1

For these projects, we were also tasked with pushing our code to a git repository that the professors and TAs used to grade our assignments. Before this class, I used GitHub Desktop for my own side projects, and it worked well enough. Getting used to git on the command line was pretty easy, and it was a sign of things to come in my learning. I found it so much easier to perform operations in the terminal; I was definitely a bit quicker typing git add ., git commit -m "message", etc. than navigating around the desktop interface and clicking buttons everywhere. I’d later find that operations that would benefit me down the road, such as git blame and git diff, were easier to reach from here too. Needless to say, there was no going back.

After this, we were tasked with creating a clock… in binary. We had to display a time in hh:mm:ss format, with each digit replaced by little “dots” representing that number in binary. I remember this project fondly because it was the first time I had to ask someone for help, and it was about converting decimal to binary.
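For anyone curious about the decimal-to-binary part, here’s a minimal Python sketch of the idea (not the C I wrote for the course). It assumes one column of four dots per decimal digit, binary-coded-decimal style, which is my reading of “each of the digits”; the function names are made up for illustration.

```python
def to_bits(value, width=4):
    """Binary digits of `value`, most significant bit first."""
    return [(value >> shift) & 1 for shift in range(width - 1, -1, -1)]


def binary_clock_columns(timestamp):
    """Turn "hh:mm:ss" into one 4-bit column per decimal digit (BCD style)."""
    return [to_bits(int(ch)) for ch in timestamp if ch.isdigit()]


def render(columns, on="o", off="."):
    """Draw the columns as rows of dots; the top row is the 8s place."""
    rows = []
    for bit in range(4):
        rows.append(" ".join(on if column[bit] else off for column in columns))
    return "\n".join(rows)


if __name__ == "__main__":
    print(render(binary_clock_columns("12:34:56")))
    # . . . . . .
    # . . . o o o
    # . o o . . o
    # o . o . o .
```

Each digit only needs four dots because the largest one, 9, is 1001 in binary.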

Other than that, this was the first time I was exposed to both shell scripting and Makefiles. The shell script was pretty basic: it just printed the time every second, and I had to pipe its output into the executable that displayed the time:

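```bash
#!/bin/bash

myvar=1  # leftover variable; nothing below uses it

# print the current time once per second, forever;
# this output gets piped into the display program
while :
do
    date +"%H:%M:%S"
    sleep 1s
done
```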

It was at this exact moment that piping made a lot more sense. The revelation that you could send the output of any program straight into another one was fascinating, and it’s something I’ve used a fair amount since this course. One of the most intriguing applications of it I found was with the cowsay and fortune commands:

```
$ fortune | cowsay -f eyes
 _____________________________________
/ Someone whom you reject today, will \
\ reject you tomorrow.                /
 -------------------------------------
    \
     \
                                   .::!!!!!!!:.
  .!!!!!:.                        .:!!!!!!!!!!!!
  ~~~~!!!!!!.                 .:!!!!!!!!!UWWW$$$
      :$$NWX!!:           .:!!!!!!XUWW$$$$$$$$$P
      $$$$$##WX!:      .<!!!!UW$$$$"  $$$$$$$$#
      $$$$$  $$$UX   :!!UW$$$$$$$$$   4$$$$$*
      ^$$$B  $$$$\     $$$$$$$$$$$$   d$$R"
        "*$bd$$$$      '*$$$$$$$$$$$o+#"
             """"          """""""
```
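Under the hood, the shell is just connecting one program’s stdout to the next program’s stdin. Here’s a minimal Python sketch of doing that plumbing by hand, following the pipeline pattern from Python’s subprocess documentation; the two one-line inline scripts are made-up stand-ins for something like fortune and cowsay.

```python
import subprocess
import sys

# Made-up stand-ins for the two ends of the pipe: the producer prints two
# timestamps, and the consumer prefixes every line it reads with "got ".
PRODUCER = "for t in ['12:00:00', '12:00:01']: print(t)"
CONSUMER = "import sys\nfor line in sys.stdin: print('got ' + line.strip())"


def run_pipeline():
    """Rough Python equivalent of running `producer | consumer` in a shell."""
    producer = subprocess.Popen([sys.executable, "-c", PRODUCER], stdout=subprocess.PIPE)
    consumer = subprocess.Popen(
        [sys.executable, "-c", CONSUMER],
        stdin=producer.stdout,   # the producer's stdout becomes the consumer's stdin
        stdout=subprocess.PIPE,
        text=True,
    )
    producer.stdout.close()      # lets the producer get SIGPIPE if the consumer exits early
    output, _ = consumer.communicate()
    producer.wait()
    return output


if __name__ == "__main__":
    print(run_pipeline(), end="")
    # got 12:00:00
    # got 12:00:01
```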

Makefiles, however, were where things started to get very interesting for me. Manually compiling everything with gcc felt tedious, especially when linking objects was involved. Being able to automate that part of the development process was a total game-changer! I wish I had used them in the systems-level programming classes I took later, but it’s never too late to dive more into them in my own endeavors.
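The trick that makes this worthwhile is that make only rebuilds a target when it’s missing or older than one of the files it depends on. Here’s a simplified Python sketch of that timestamp rule, using a hypothetical needs_rebuild helper and made-up file names; real make layers dependency chains, pattern rules, and phony targets on top of it.

```python
import os
import tempfile


def needs_rebuild(target, sources):
    """make's basic rule: rebuild if the target is missing or older than any source."""
    if not os.path.exists(target):
        return True
    target_mtime = os.path.getmtime(target)
    return any(os.path.getmtime(source) > target_mtime for source in sources)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        source = os.path.join(workdir, "clock.c")
        target = os.path.join(workdir, "clock.o")
        open(source, "w").close()
        print(needs_rebuild(target, [source]))  # True: clock.o doesn't exist yet
        open(target, "w").close()
        os.utime(source, (1000, 1000))          # pretend clock.c is old...
        os.utime(target, (2000, 2000))          # ...and clock.o is newer
        print(needs_rebuild(target, [source]))  # False: nothing to do
        os.utime(source, (3000, 3000))          # "edit" clock.c
        print(needs_rebuild(target, [source]))  # True: clock.c changed since
```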

Then came the final project in the course. It was similar to the first one I worked on, displaying initials on a sense hat, but this time we had to make them scroll and wrap around… the horrors! For many of my friends, this was the hardest project, but for me, it somehow just clicked. I frankly don’t even remember how. That said, getting the scrolling logic to work with modulo and the sense hat API did take me some time.
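Modulo is what makes the wrap-around painless. Here’s a minimal Python sketch of the idea, assuming the message is stored as a list of columns (the initials plus a blank spacer column or two) and the display shows 8 of them at a time; scroll_window is a hypothetical name, and the actual drawing to the sense hat is left out.

```python
def scroll_window(columns, offset, width=8):
    """Return the `width` columns visible at `offset`, wrapping back to the start."""
    return [columns[(offset + x) % len(columns)] for x in range(width)]


if __name__ == "__main__":
    # Letters stand in for real 8-pixel columns so the wrap-around is easy to see.
    message = list("ABCDEFGHIJ")
    for frame in range(3):
        print("".join(scroll_window(message, frame + 4)))
    # EFGHIJAB
    # FGHIJABC
    # GHIJABCD
```

Because the offset gets reduced modulo the message length on every lookup, the frame counter can simply keep counting up forever.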

Maybe it’s not all that bad…

I ended up doing pretty well in that class. I genuinely found it fun in the end, especially thanks to my really supportive professor, who was never short on wisdom about lower-level programming, and I had become so much better at using Unix tools.

I didn’t know it at the time, but I would go on to use them a lot more in academic and professional projects, starting with my internship the following summer. I used bash a lot more there, and to be totally honest, I strongly preferred the commands in Unix shells over those in Windows. I think the commands just make a lot more sense in the former, such as rm to remove a file (or rm -r for a folder), compared to del and rmdir in Windows. Oh, and having the current git branch displayed on every prompt line while working on a large codebase was convenient, if a bit trivial:

```
sbrug@Desktop MINGW64 ~/Documents/GitHub/spotify4u (master)
```

Fast forward a couple of months after the course finished: I hadn’t even realized it, but I had become so accustomed to navigating around the terminal and using command-line text editors like vim. I remember that at the start of the semester, I had so much trouble just moving around a file in vim. Now it felt natural, and at times I even reached for it to write small scripts in Python and shell. It was just so much more convenient than opening up VSCode, opening the folder I needed, and then opening an entirely new terminal window there. In general, navigating my files in a terminal felt smoother than clicking through a desktop UI.

img2pi: Image to sense hat

Between projects in that course, I put in the time to work on a side project involving my Pi, riding the momentum from finishing one of them. I decided to challenge myself a bit and write a program to display a (very, very shrunken down) image file on a sense hat. Since the resolution is effectively 8x8, it would have to be a very simple pattern to work well.

I started by writing a Python script to resize the image and write the color of each pixel to a set of text files, one per color channel:

```python
from PIL import Image
import os.path

def find_occurences(string, char):
    return [i for i, letter in enumerate(string) if letter == char]

def main():
    # resize the image to 8x8 (resolution of Pi sense hat)
    imageLoc = input('Enter the location of the image > ')
    img = Image.open(imageLoc)
    result = img.resize((8, 8), resample=Image.BILINEAR)
    result.save('result.png')
    img = Image.open('result.png')
    imgFinal = img.convert('RGB').load()

    # get colors from image, put in file
    width, height = result.size

    openMode = "x"
    if os.path.exists('r.txt'):
        openMode = "w"
    red = open('r.txt', openMode)

    openMode = "x"
    if os.path.exists('g.txt'):
        openMode = "w"
    green = open('g.txt', openMode)

    openMode = "x"
    if os.path.exists('b.txt'):
        openMode = "w"
    blue = open('b.txt', openMode)

    for i in range(width):
        for j in range(height):
            colorString = str(imgFinal[i, j])
            commas = find_occurences(colorString, ',')
            red.write(colorString[1:commas[0]] + '\n') # don't include "("
            green.write(colorString[commas[0] + 2:commas[1]] + '\n') # don't include comma
            blue.write(colorString[commas[1] + 2:-1] + '\n') # don't include ")" or comma

    red.close()
    green.close()
    blue.close()

main()
```

I had to resort to this approach instead of parsing the colors from the image directly in C… that was a bit complicated for someone at my skill level, not to mention I was unfamiliar with scp at the time. This was also my first time using PIL for image manipulation, a very useful library which I would end up using a lot more in the future.
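Looking back, the string slicing wasn’t necessary: for an RGB image, each entry of the object returned by load() is already an (R, G, B) tuple, and opening a file with "w" creates it if it doesn’t exist yet. Here’s a tidier sketch of just the file-writing half under those assumptions; write_channels is a hypothetical helper, and since anything indexable as pixels[x, y] works, the demo uses a plain dict instead of a real image so it runs without Pillow.

```python
import os
import tempfile


def write_channels(pixels, width, height, paths=("r.txt", "g.txt", "b.txt")):
    """Write each pixel's R, G and B values to three files, one value per line.

    `pixels` is anything indexable as pixels[x, y] -> (r, g, b), such as the
    object returned by PIL's img.convert("RGB").load().
    """
    # "w" creates a missing file and truncates an existing one.
    with open(paths[0], "w") as red, open(paths[1], "w") as green, open(paths[2], "w") as blue:
        for x in range(width):           # same column-by-column order as the original
            for y in range(height):
                r, g, b = pixels[x, y]   # unpack the tuple instead of slicing str()
                red.write(f"{r}\n")
                green.write(f"{g}\n")
                blue.write(f"{b}\n")


if __name__ == "__main__":
    # A hypothetical 2x2 "image" standing in for the resized 8x8 one.
    fake_pixels = {(0, 0): (255, 0, 0), (0, 1): (0, 255, 0),
                   (1, 0): (0, 0, 255), (1, 1): (255, 255, 255)}
    with tempfile.TemporaryDirectory() as workdir:
        paths = [os.path.join(workdir, name) for name in ("r.txt", "g.txt", "b.txt")]
        write_channels(fake_pixels, 2, 2, paths)
        with open(paths[0]) as f:
            print(f.read().split())  # ['255', '0', '0', '255']
```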

Then I wrote a C file to read from those three color files (representing R, G, and B) and interface with the sense hat:

```c
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>

#include "sense.h" // the course-provided sense hat library

int main(void) {
    // these names match the files the Python script writes
    char* reds = "./r.txt";   // the text file containing all red colors
    char* greens = "./g.txt"; // the text file containing all green colors
    char* blues = "./b.txt";  // the text file containing all blue colors

    FILE* redFile = fopen(reds, "r");
    FILE* greenFile = fopen(greens, "r");
    FILE* blueFile = fopen(blues, "r");
    if (!redFile || !greenFile || !blueFile) {
        perror("fopen");
        return 1;
    }

    char line[256];
    int red[64] = {0}, green[64] = {0}, blue[64] = {0}; // there are 64 tiles to fill
    int i = 0;

    // i < 64 keeps an overly long file from overflowing the arrays
    while (i < 64 && fgets(line, sizeof(line), redFile)) {
        red[i] = atoi(line); // convert char* to int
        i++;
    }
    fclose(redFile);

    i = 0;
    while (i < 64 && fgets(line, sizeof(line), greenFile)) {
        green[i] = atoi(line);
        i++;
    }
    fclose(greenFile);

    i = 0;
    while (i < 64 && fgets(line, sizeof(line), blueFile)) {
        blue[i] = atoi(line);
        i++;
    }
    fclose(blueFile);

    // these lines come from the 'sense.h' library
    pi_framebuffer_t *fb = getFrameBuffer();
    sense_fb_bitmap_t *bm = fb->bitmap;
    clearFrameBuffer(fb, 0x0000); // make the whole sense hat board black

    // walk the colors in reverse, assigning each one to the next tile
    int j = 0;
    for (int i = 63; i >= 0; i--) { // >= 0 so color 0 (the last tile) is drawn too
        uint16_t color = getColor(red[i], green[i], blue[i]);
        int x = j % 8;
        int y = j / 8;
        // these lines utilize functions included with 'sense.h'
        bm->pixel[y][x] = color;
        j++;
    }

    return 0;
}
```

It was a pretty small project overall, and quite frankly it was ineffective at displaying images well (no surprise there), but I was super satisfied at how it turned out, and how much I learned from it. Like the other projects I did for the course, it was very, very satisfying getting a program to work between different computers.
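One detail hiding in that C code: each color ends up as a uint16_t, because the Sense HAT’s LED framebuffer uses 16-bit RGB565 (5 bits of red, 6 of green, 5 of blue). I never looked inside the course’s sense.h, so it’s an assumption that getColor does exactly this, but the standard way to pack three 8-bit channels into RGB565 looks like this Python sketch (rgb565 is a made-up name):

```python
def rgb565(red, green, blue):
    """Pack 8-bit R, G, B values into one 16-bit RGB565 color.

    The low bits of each channel are dropped: red and blue keep their top
    5 bits, green keeps its top 6.
    """
    return ((red >> 3) << 11) | ((green >> 2) << 5) | (blue >> 3)


if __name__ == "__main__":
    print(hex(rgb565(255, 0, 0)))      # 0xf800
    print(hex(rgb565(0, 255, 0)))      # 0x7e0
    print(hex(rgb565(0, 0, 255)))      # 0x1f
    print(hex(rgb565(255, 255, 255)))  # 0xffff
```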

Other cool classes

There’s a gap of about two and a half years between the last section and the next one, so I’m going to have to fill it in first.

Since that spring, I took several systems-level programming courses: Data Structures, Machine Organization & Assembly, Operating Systems, and Parallel Programming. From working with POSIX functions for multithreading and scheduling, to using CUDA to run parallelized programs, I explored many different regions of low-level programming, and it quickly became an area of interest for me. By this point, I had adjusted my thinking enough to feel comfortable working more directly with memory, and it felt genuinely satisfying to be able to have that much control over it through my programming.

- For my Operating Systems class, I worked with a group to design an operating system simulation, covering job scheduling, management of resource requests, and deadlock avoidance.

- For one of my Parallel Programming projects, I had to use C and CUDA to parallelize an algorithm that computes fractals in different resolutions.

Oh, and it took me three courses to be able to completely grasp pointers and references! That was fun.

Return to Linux

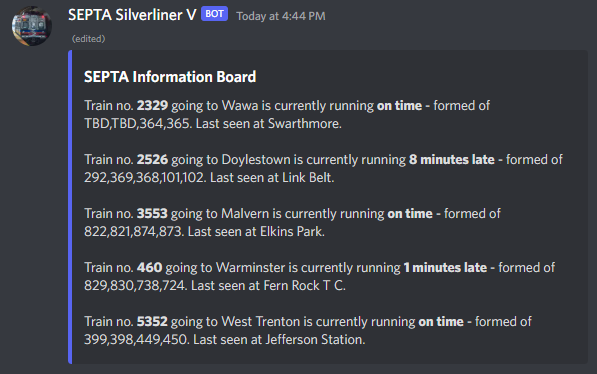

Near the end of summer 2024, I started tinkering with my Raspberry Pi 3, which had sat unused for over two years by then, to host a Discord bot I made to track my SEPTA trains to and from university. I wrote the bot three years before then, but had it hosted on a remote server that a friend of mine from the UK spun up. As grateful as I was that he let me use it, I wanted to learn how to set up something like that myself, and what better candidate than my Pi gathering dust?

The main display of delays of certain tracked trains. I found this to be an easier way of tracking my trains than the official SEPTA app, and that's saying something

So after doing a deep clean of my room, I took my Pi out, looked around the house for a micro-SD card reader, and installed Raspbian OS on it. It took me a little while, but getting it all hooked up to my home network and being able to SSH into it gave me a real sense of accomplishment. As expected, though, its hardware was pretty light, so my programs ran noticeably slower than on my friend’s remote server. But for me, it was all about the feeling of doing something completely on my own.

If my previous academic projects were what made me really appreciate the bash shell, this experience was what made me like Linux a lot more - in some cases, even more than Windows!

Minimalism is cool (for once)

I really, really love how minimal and - for lack of a better word - “transparent” Linux is.

It just comes with the essentials; if you need it for anything else, it’s on you to get things installed. Anyone who has gone through a curriculum similar to mine probably knows by now that Windows is filled to the brim with bloat, and that making any change that remotely involves the system itself is at best annoying, and at worst nearly impossible. Linux will literally let you uninstall the bootloader if you want to. After all, the OS is totally free! What do you have to lose (besides your precious time)?

For example, Raspbian, being based on Debian, comes with apt-get, which is used to manage packages. I had to go looking around to learn what it can really do and just how powerful it is at its task, but I quickly realized that the sheer convenience it brings to installing software is unparalleled.2 Seriously, it beats constantly clicking “Next” through an *.msi installer, or even worse, dealing with Windows’s UAC.3 Just run the command as root with whatever package you need, type in Y, and you’re set.

It was also cool to see how easily you can access the system files in Linux - not that you’d regularly have to, but if you have the ability and need to, you absolutely can.

Overall, it just feels like with Linux, you are truly able to know what really is on your computer and what it does, such as what runs on startup and how healthy those services are, and your installed software and all of their dependencies. Pretty nice how smart it makes you feel.

I mean look, everything is right there!

Upgrades, people, upgrades

Eventually I spoke to my grandfather about how I was using my Pi, and some ideas I had going forward, such as getting Pi-hole working as a network-wide ad blocker, and maybe dabbling with some self-hosted projects like a home cloud, backup server, and knowledge center. The major thing holding me back was the limited resources on the Pi 3, which couldn’t run Docker containers for most of these projects even if it tried.

We spoke on and off about it, and I was pleasantly surprised to be given a Raspberry Pi 5 by him! It’s the newest model, and mine has 8GB of RAM compared to the 1GB my old Pi had. I was super eager to see how much more I could do with a more powerful machine in the palm of my hand (quite literally).

systemd, at your service

The first thing I wanted to do was find a way to keep my SEPTA bot running perpetually, even after an API error (because SEPTA’s API does go down occasionally) or a system crash. That meant getting rid of the routine of…

- opening up RealVNC Viewer to remote into the Pi’s desktop

- launching a terminal window manually

- running the build/run scripts

- making sure that terminal window stays up

…and moving it into a self-contained systemd service. Not only would this let me run custom services after the Pi boots up, but I could also readily access the program’s logs at any point in time to monitor its status, and even go way back to when it started to check past events. And best of all, systemd can automatically restart a service if it crashes (given the right Restart= setting)!

After messing around way too much with node and some other dependencies, I wrote up a service file of my own to accomplish just that:

```
sbrug@windragon:/etc/systemd/system $ cat septa.service
[Unit]
Description=Run SEPTA bot on startup
After=network.target

[Service]
Environment="PATH=/home/sbrug/.nvm/versions/node/v22.6.0/bin:/usr/bin:/bin"
ExecStart=/home/sbrug/Documents/septa-checker-v2/start.sh
WorkingDirectory=/home/sbrug/Documents/septa-checker-v2
StandardOutput=journal
StandardError=journal
Restart=always

[Install]
WantedBy=multi-user.target
```

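To break it down: in the Unit section, I give the service a description and tell systemd to start it after network.target, i.e. once the network stack has been brought up (necessary since not only will the essential services be running by then, but the bot needs to reach Discord, of course). Strictly speaking, network.target doesn’t guarantee a working connection; for that, systemd’s documentation points to network-online.target, pulled in with a Wants= line. Over in the Install section, the WantedBy field hooks the service into the boot process, with multi-user.target being the standard target a normal boot passes through, so the service starts on every boot.

In the Service section, ExecStart points to a shell script that handles starting up the bot: it builds the project and then launches it to Discord. The Environment line puts my nvm-installed copy of Node on the PATH (systemd doesn’t load my shell profile, so it wouldn’t find it otherwise), and WorkingDirectory sets the folder it runs from. It’s a dumb little file, but it works, and that’s all that matters: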

```sh
#!/bin/sh

# build and launch the bot; if it ever exits, loop around and start it again
while true; do
    /home/sbrug/.nvm/versions/node/v22.6.0/bin/npm run build
    /home/sbrug/.nvm/versions/node/v22.6.0/bin/npm run start
done
```

The Service section also sends everything the bot prints, errors included, to the systemd journal, and Restart=always tells systemd to relaunch the service whenever it exits. As you can see, I can readily check its status with a single command:

```
sbrug@windragon:/etc/systemd/system $ sudo systemctl status septa.service
● septa.service - Run SEPTA bot on startup
     Loaded: loaded (/etc/systemd/system/septa.service; enabled; preset: enabled)
     Active: active (running) since Thu 2025-02-20 11:40:32 EST; 3 weeks 2 days ago
   Main PID: 859 (start.sh)
      Tasks: 24 (limit: 9573)
        CPU: 16min 20.874s
     CGroup: /system.slice/septa.service
             ├─ 859 /bin/sh /home/sbrug/Documents/septa-checker-v2/start.sh
             ├─72935 "npm run start"
             ├─72946 sh -c "ts-node -r dotenv/config ./prod/index.js"
             └─72947 node /home/sbrug/Documents/septa-checker-v2/node_modules/.bin/ts-node -r dotenv/config ./prod/inde>

Mar 13 16:50:10 windragon start.sh[34238]: }
Mar 13 16:50:10 windragon start.sh[34238]: }
Mar 13 16:50:10 windragon start.sh[72916]: > build
Mar 13 16:50:10 windragon start.sh[72916]: > tsc
Mar 13 16:50:12 windragon start.sh[72935]: > start
Mar 13 16:50:12 windragon start.sh[72935]: > ts-node -r dotenv/config ./prod/index.js
Mar 13 16:50:15 windragon start.sh[72947]: Loading actions...
Mar 13 16:50:15 windragon start.sh[72947]: Ready!
lines 1-20/20 (END)
```

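In the worst case scenario, the journal also captures any errors and the reasons they occur, making them very easy to track down and fix. For the longer history (as far back as the journal keeps logs), journalctl -u septa.service shows everything the service has printed.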
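Under the hood, both Restart=always and the while true loop in start.sh boil down to the same supervisor pattern: run the program, and when it exits, start it again. (Since start.sh already loops on its own, systemd’s restart only kicks in if the script itself dies.) Here’s a minimal Python sketch of that pattern; supervise, max_restarts, and delay_s are hypothetical names for illustration, and in systemd the delay between restarts is set with RestartSec=.

```python
import subprocess
import sys
import time


def supervise(command, max_restarts=None, delay_s=1.0):
    """Run `command` and start it again whenever it exits, like Restart=always.

    With max_restarts=None this loops forever; otherwise it stops after that
    many restarts and returns every exit code it saw.
    """
    exit_codes = []
    while True:
        result = subprocess.run(command)
        exit_codes.append(result.returncode)
        if max_restarts is not None and len(exit_codes) > max_restarts:
            return exit_codes
        print(f"exited with {result.returncode}; restarting in {delay_s}s", file=sys.stderr)
        time.sleep(delay_s)


if __name__ == "__main__":
    # A stand-in "bot" that crashes immediately with exit code 3.
    crashing_bot = [sys.executable, "-c", "raise SystemExit(3)"]
    print(supervise(crashing_bot, max_restarts=2, delay_s=0.1))  # [3, 3, 3]
```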

I am speed

The biggest change I noticed going from a Pi 3 to a Pi 5 is how BLAZINGLY FAST terminal commands in Linux run.

I’d noticed a little bit of that around some college friends who use Apple systems. When I TA’d a Software Engineering class in my junior year, I often helped students sort out version control problems. I’d manually enter a bunch of git commands on their machines, and it was genuinely shocking to me how fast they ran compared to on my Windows laptop.

On Windows, I would always notice a tiny bit of overhead between sending a git command and it actually executing. This was the case especially with push, where it waited anywhere between a split second and a full second before pushing changes. Not that this was terrible or world-ending, but seeing others’ systems do it near instantly made me realize that wasn’t really the norm.

On Debian, just about every push I ran went through almost immediately after I sent the command.

Pretty sick stuff.

A new coding environment?

When I upgraded my hardware, I didn’t think too much about moving away from Windows for coding, but this next situation pushed me in that direction.

In the fall of my senior year, I was taking a class in Computer Vision. As much as the subject interested me, being very relevant to the modern world and the technologies that surround us, I was bored out of my mind working through a bunch of lecture slides and coding Python programs to perform random transformations on an image of butterflies. So I decided to take things into my own hands during Thanksgiving break and develop a side project in the field that aligned with my own interests.

The result was a train activity detector, which takes in a YouTube livestream of a railroad-side camera capturing day-by-day train movements and automatically extracts clips of trains in motion using the OpenCV Python library.

A demonstration of the script

I’ll post more about this, and a more detailed description of the development process, in another blog post, but for now, I’ll focus on what this has to do with Linux.

The intention was that I’d have this program running on my Pi 24/7, automatically extracting clips and uploading them to some sort of database or website for others to view. As a result, I had to make use of subprocesses to launch yt-dlp and capture portions of a stream for the script to process. It would then save the resulting video, and run another Python script to use OpenCV and detect train movements.

The only issue was that on Windows, it wouldn’t even make it to the second part!!

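```python
import signal
import subprocess
import time

import psutil

# `url` and `result_queue` are defined elsewhere in the script.
try:
    output_name = 'live-' + str(time.time()) + '.mp4'
    command = ['yt-dlp', url, '-o', output_name]
    process = subprocess.Popen(
        command,
        # discard yt-dlp's output: an unread PIPE can fill up and stall it
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL
    )

    # sleep for the duration of the vid we want to save
    for i in range(60*30):
        time.sleep(1)

    # Check if we're on Windows
    if psutil.WINDOWS:
        process.send_signal(signal.CTRL_C_EVENT)
    else:
        process.send_signal(signal.SIGINT)

    # let yt-dlp finish writing the file before handing it off
    process.wait()
    result_queue.put(output_name)

except KeyboardInterrupt:
    pass
```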

Yes, this code is janky. And yet it doesn’t even work well on Windows still…

To break it down a bit: after capturing a chunk of the stream (as written, the loop waits 60*30 seconds, i.e. 30 minutes), I want to interrupt the yt-dlp process so that it wraps up and saves the capture as an mp4 for the next step in the pipeline.

The SIGINT interrupt signal is designed exactly for a situation like this: it’s what a terminal sends when you press Ctrl+C, and by default it terminates the process. The problem is that SIGINT behaves differently on Windows than on a POSIX-compliant OS such as Linux. Windows has no real SIGINT to send to a single process; in practice, the console Ctrl+C event Python sends instead goes to every process attached to the same console, and the system handles it on a separate thread it creates. As a result, instead of stopping just the subprocess I created within this program, it also interrupts the entire Python script. On a Linux system, however, it works entirely fine.
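A common workaround, which I haven’t tested against yt-dlp itself, is to start the child in its own process group on Windows and send it CTRL_BREAK_EVENT, while sticking with SIGINT everywhere else. Here’s a minimal Python sketch of that approach; start_interruptible and interrupt are hypothetical helpers, and the demo child is a tiny Python script rather than yt-dlp. Keep in mind that Ctrl+Break isn’t the same as Ctrl+C, so whether a program shuts down cleanly on it (and, for yt-dlp, still finishes writing the file) depends on the program.

```python
import os
import signal
import subprocess
import sys


def start_interruptible(command, **popen_kwargs):
    """Start a child process that can later be interrupted on its own."""
    if os.name == "nt":
        # Give the child its own process group, so a console event aimed at
        # that group doesn't also land on this script.
        flags = popen_kwargs.get("creationflags", 0)
        popen_kwargs["creationflags"] = flags | subprocess.CREATE_NEW_PROCESS_GROUP
    return subprocess.Popen(command, **popen_kwargs)


def interrupt(process):
    """Ask the child to stop, roughly like pressing Ctrl+C in its terminal."""
    if os.name == "nt":
        # Ctrl+C is disabled inside a new process group, so use Ctrl+Break.
        process.send_signal(signal.CTRL_BREAK_EVENT)
    else:
        process.send_signal(signal.SIGINT)


# A tiny stand-in child (not yt-dlp): it waits until interrupted, then exits cleanly.
CHILD = (
    "import signal, time\n"
    "signal.signal(signal.SIGINT, signal.default_int_handler)\n"
    "try:\n"
    "    print('ready', flush=True)\n"
    "    time.sleep(60)\n"
    "except KeyboardInterrupt:\n"
    "    print('interrupted', flush=True)\n"
)

if __name__ == "__main__":
    child = start_interruptible([sys.executable, "-c", CHILD], stdout=subprocess.PIPE, text=True)
    child.stdout.readline()                   # wait until the child is inside its try block
    interrupt(child)
    output, _ = child.communicate(timeout=10)
    print(output.strip(), child.returncode)   # on Linux: interrupted 0
    print("parent script still running")
```

On Linux, the child prints “interrupted” and exits with code 0 while the parent script keeps running, which is the behavior the capture pipeline needs.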

The intent in the end was to run it on my Linux system, but this entire ordeal made it all the more painful to test locally. And it made me realize that in a handful of areas (to put it generously), development in Windows is more limited than on Linux. Eventually, I put more effort into switching over to Linux for development; this was just the catalyst. I’ll describe the actual switch to my Pi for coding in one of the next sections.

tl;dr:

Seriously, it's 2025. Why?? Source: /r/programmerhumor

ssh: Securing the system

Fast forward a couple of months: I finished my last “hard” semester of college, Christmas came and went, and I obtained my AWS Cloud Practitioner certification. I didn’t spend much time working on my Pi around this time, besides experimenting a bit and trying to run a Minecraft server for my friends from college. I ended up abandoning that, though, and just borrowed an Oracle VM instance my friend spun up to run it.

If I wanted to do anything more exciting with my Pi, I figured I should probably make it accessible outside my home network. The only concern was that exposing it publicly would be a security risk due to factors like port scanning, and the fact that - well - it is literally opening up my home’s network. So I had to find a way to make sure access to my Pi is secured as well as possible.

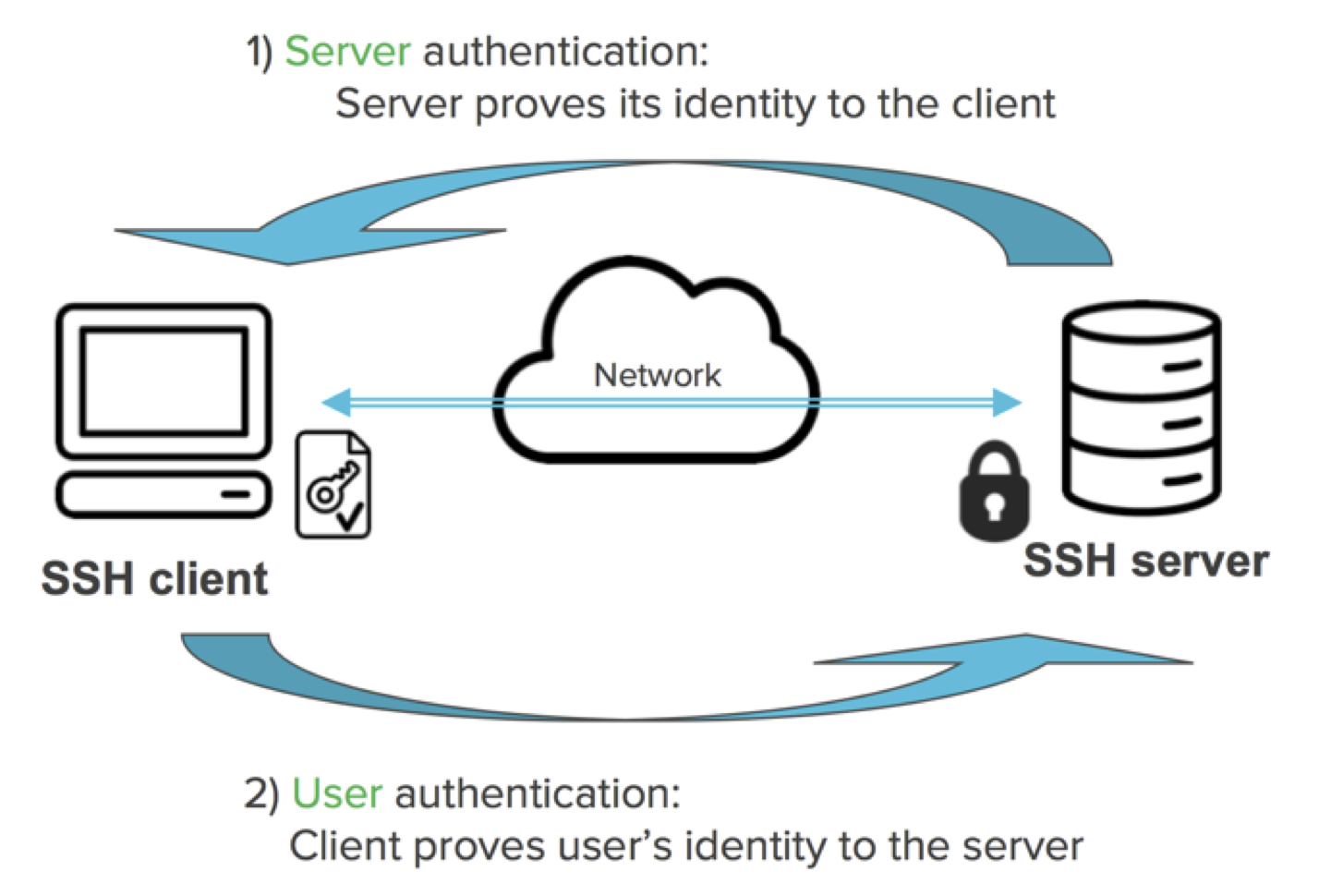

What I did first was set up ssh keys on my Pi. To provide a simplified explanation, these work like extremely long passwords (an RSA key is typically 2048 or 3072 bits long). More specifically, they come in pairs of public and private keys.

The public key is stored in the target machine’s (the server’s) ~/.ssh/authorized_keys file. The best way to think about it is as the lock. It’s publicly accessible, but does nothing unless you have your own key to unlock it.

The private key is stored on your own machine. It’s like a unique key; it is private (unless you decide to share it with someone else, which frankly, you shouldn’t) and is exactly what you need to unlock the lock, or the public key in this case.

This surprisingly took me a long while to understand, even after internships where I had to work with ssh-keygen. But you’re never too old to learn :-)

A visual showing how ssh keypairs work. Source: ssh.com
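To make the lock-and-key idea a little more concrete, here’s a toy Python sketch of how a key pair proves who you are: the client signs something with its private key, and the server checks the signature using nothing but the public key. It uses textbook RSA with tiny made-up primes purely for illustration; real SSH signs data tied to the session, uses far larger keys (or Ed25519), and adds hashing and padding, so never use anything like this for actual security.

```python
# Toy textbook RSA with tiny primes, for illustration only: real keys are
# thousands of bits long and real signatures add hashing and padding.
P, Q = 61, 53
N = P * Q                 # modulus shared by both keys (3233)
PHI = (P - 1) * (Q - 1)   # 3120
E = 17                    # public key = (E, N): the part that goes in authorized_keys
D = pow(E, -1, PHI)       # private key = (D, N): stays on your machine (2753)


def sign(challenge, private_exponent=D):
    """Client side: only the private key can produce a valid signature."""
    return pow(challenge, private_exponent, N)


def verify(challenge, signature):
    """Server side: check the signature with nothing but the public key."""
    return pow(signature, E, N) == challenge


if __name__ == "__main__":
    challenge = 1234                                  # stand-in for what gets signed
    print(verify(challenge, sign(challenge)))         # True
    forged = sign(challenge, private_exponent=D + 1)  # the wrong private key
    print(verify(challenge, forged))                  # False
```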

I initially used a little tool called PuTTYgen to generate my keys, and it did work for authenticating into my Pi, but its shortcomings became a lot more visible later on. Most notably, it took a lot longer, because of this:

The program waits until you move around this area enough. Source: pimylifeup.com

It’s for an understandable reason, of course (it uses your mouse movements as a source of randomness for the key), but it struck me as a little funny.

ssh-keygen, on the other hand, is a lot faster and achieves the same thing; it also generates keys in OpenSSH format, so you can use them for any service that specifically requires that kind of key. All you do is enter a file name and, optionally, a passphrase:

```
sbrug@windragon:~ $ ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/home/sbrug/.ssh/id_rsa): hello_world
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in hello_world
Your public key has been saved in hello_world.pub
The key fingerprint is:
SHA256:5P/3dpwY883VXaRyykXXvqU+RciSYxIjV+zpA/ha4x4 sbrug@windragon
The key's randomart image is:
+---[RSA 3072]----+
| o. .|
| . + . . +|
| .+ + = * |
| o. o O * =|
| S. * B o*|
| .+ =o..=|
| +E. o=++|
| . .o .+o*|
| .. .. +o|
+----[SHA256]-----+
sbrug@windragon:~ $
```

I was always curious about what randomart is: it lets humans compare host keys visually, instead of reading through lengthy fingerprint strings.
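For the curious, the algorithm behind randomart is nicknamed the “drunken bishop”: starting in the middle of a 17x9 board, each pair of bits in the fingerprint moves a marker one step diagonally, and each cell’s symbol shows how often the marker landed there, with S and E marking the start and end. Here’s a Python sketch of that walk based on my understanding of OpenSSH’s implementation, so treat it as an illustration rather than a byte-for-byte clone; randomart is a hypothetical function name, and the plain borders skip the [RSA 3072]/[SHA256] labels.

```python
import hashlib

SYMBOLS = " .o+=*BOX@%&#/^SE"  # visit count -> symbol; S and E are reserved markers
WIDTH, HEIGHT = 17, 9


def randomart(digest):
    """Walk the "drunken bishop" over a 17x9 board, driven by the digest bytes."""
    field = [[0] * WIDTH for _ in range(HEIGHT)]  # field[y][x] = visit count
    x, y = WIDTH // 2, HEIGHT // 2                # start in the middle
    for byte in digest:
        for _ in range(4):                        # each byte holds four 2-bit moves
            x += 1 if byte & 0x1 else -1          # low bit: right or left
            y += 1 if byte & 0x2 else -1          # next bit: down or up
            x = min(max(x, 0), WIDTH - 1)         # stay on the board
            y = min(max(y, 0), HEIGHT - 1)
            if field[y][x] < len(SYMBOLS) - 3:    # cap counts below 'S' and 'E'
                field[y][x] += 1
            byte >>= 2
    field[HEIGHT // 2][WIDTH // 2] = len(SYMBOLS) - 2  # 'S' = start
    field[y][x] = len(SYMBOLS) - 1                     # 'E' = end
    border = "+" + "-" * WIDTH + "+"
    rows = ["|" + "".join(SYMBOLS[count] for count in row) + "|" for row in field]
    return "\n".join([border, *rows, border])


if __name__ == "__main__":
    print(randomart(hashlib.sha256(b"hello world").digest()))
```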

The remote code server

With some time on my hands after setting up my ssh keys for logging in, I realized I had to do the same for my GitHub repos, since GitHub stopped accepting account passwords for Git operations a while back. I use https to clone repositories on both my Windows computers, but I figured this would be a good time to learn how to do the same through ssh. Even though it was almost exactly the same process, it ended up taking a bit longer. By this point I was using ssh-keygen instead of PuTTYgen, but it seemed like only keys named id_rsa and a couple of other defaults would work. So I can’t use a more clearly named key for git? Sheesh. (As it turns out, that’s the ssh client’s default behavior rather than a GitHub rule: on its own it only tries the default file names, but a custom-named key works once you point to it with an IdentityFile entry in ~/.ssh/config.)

Once I got that working, I got connecting over ssh from VSCode to work too, but this required some work on the Windows end… for what seemed like odd reasons. I had to deny every admin user permission to access my ssh config folder, leaving only my account able to access it. That was weird, but ultimately I got things to work!

Tailscale

With the ssh keys set up, the security of my Pi was improved, but I could still only access it from my home network, not from outside. The obvious remedy would be, again, to expose it to the public internet, but the most obvious way isn’t exactly the best one, in this case for security reasons.

What I decided to do instead was set up Tailscale to serve as a “tunnel” between my Pi and my laptop and phone, allowing me to connect to it from anywhere I have internet in a secure manner. The free plan for this service is also superb, allowing for up to 20 devices to be connected in one network at a time. It was also incredibly easy, seeing as I got it done in about 15 minutes.

How the blog came to be

Now we’ve finally hit the point where I can talk about setting this project up, whew.

Initially my intent was to build the blog using Jekyll, since I was already somewhat familiar with it through a separate project’s documentation site. It’s a simple static site generator that makes it easy to deploy Markdown-based sites. I wanted to challenge myself this time, though, and use a Linux environment to set it up instead of Windows like usual.

However, I quickly ditched that direction as the dependencies - notably Ruby and the bundler gem - were giving me so much hassle. It was as if everything Ruby-related couldn’t be written to at all, so in spite of following tips online, I had to sudo my way through everything. And in some cases, not even that would work. In the end, I decided to take an alternative route.

That’s how I ended up on Hugo, another open-source static site generator. Compared to Jekyll, it was much easier to set up. The first step was getting Hugo installed; the issue was that the package on apt-get was wildly out of date, so I ended up downloading a .deb file of the latest version and installing it with dpkg -i.

The rest of it was pretty smooth sailing. I learned how Git submodules work, though ultimately I just cloned a theme repository instead, for customization reasons. I used imigios’s “not-much” theme, credited in the footer of this site, and changed the font, colors, and layouts slightly to match my main site.

Soon enough, everything was done, and I was able to start writing! Hugo’s speed is nothing to scoff at: I can run the hugo server command and it will, quite literally, IMMEDIATELY launch the server locally. It’s nuts, and it fits right in with how snappy everything else has felt on Linux.

Closing words

I find it weird to think how I went from someone who saw no point in using Linux at all, to one who prefers it over Windows in many aspects. I’ve considered switching over to it on my desktop, but due to anxiety about incompatibility with my software and the possibility of things going wrong (especially seeing as my desktop is mainly a gaming rig), I didn’t find it exactly feasible to do so. Maybe I’ll consider it later down the road when I have a metric ton of time and energy to dedicate to it, and enough motivation to consider a fresh start.

Which isn’t to say I’m looking forward to upgrading to Windows 11, because I’m really not! It just feels like a necessity…

But still, I 100% appreciate any chance I get to use my Pi. I’ve come to learn how fast it is at terminal operations, and how much control you really have over the system. Linux is absolutely a power user’s operating system and is in no way meant for the common user, since its learning curve is pretty steep (in my case, it took pursuing a computer science degree). But if you have the patience and will to learn it, it will pay great dividends.

What’s next?

There are a fair few projects I want to get started on with my Pi. The first is a backup server and home cloud, for which I plan on buying a new external hard drive. Currently, I use OneDrive to store files that I need on both my desktop and laptop, but a few factors, including its integration with Windows itself, its limited space, and a desire to move some stuff off a public cloud, have led me to consider self-hosting something similar instead. I’ve explored various options, including Nextcloud and even just using scp to move things around, but I’ll figure that out later and document the setup process in another post. In any case, even just having the drive plugged in and accessible through a shell is fine, since I’ll be using it as a warm backup of my files anyway. After all, a Windows 11 upgrade could go terribly wrong, or something crucial could fail…

I also do want to explore other self-hosting projects, such as a knowledge base, media center, and image storage, all also using my external drive. While I’m not sure if I’ll use them a ton, I do want the experience of setting them up on my own, and in a way the ability to say I have these things set up at my home.

One can hope that Linux will some day be on the same level as Windows in terms of native support for more intensive games and commonly used software like Office etc., but for now, it’ll do wonderfully as a home lab of sorts.

1. I still remember that when I worked as a software developer intern going into my senior year, I was tasked with updating developer setup documentation. It felt good adding vim instructions alongside the existing nano ones. Gotta tell them about the truly superior text editor. ;P

2. Except when that one package isn’t listed in apt-get’s repositories, for whatever reason. Thankfully, .deb files exist.

3. Yet another footnote so I can rant about how annoying it is. Seriously, Microsoft, please let us whitelist programs for UAC!! I don’t need to see the prompt every time I launch a niche game that needs admin privileges to check online for updates…